👉 Try building agents with LLaMA 3.3 ThunderAI on Regolo for free

The OpenAI Agents SDK is a powerful framework for building multi-agent workflows with reasoning, tools, and handoffs. By default, though, it calls OpenAI models such as GPT-4o, which means paying OpenAI's prices and sending your data to a closed ecosystem.

Developers want the convenience of the Agents SDK (structured handoffs, built-in memory, easy tool use) combined with the privacy, cost control, and flexibility of open models like LLaMA 3.3.

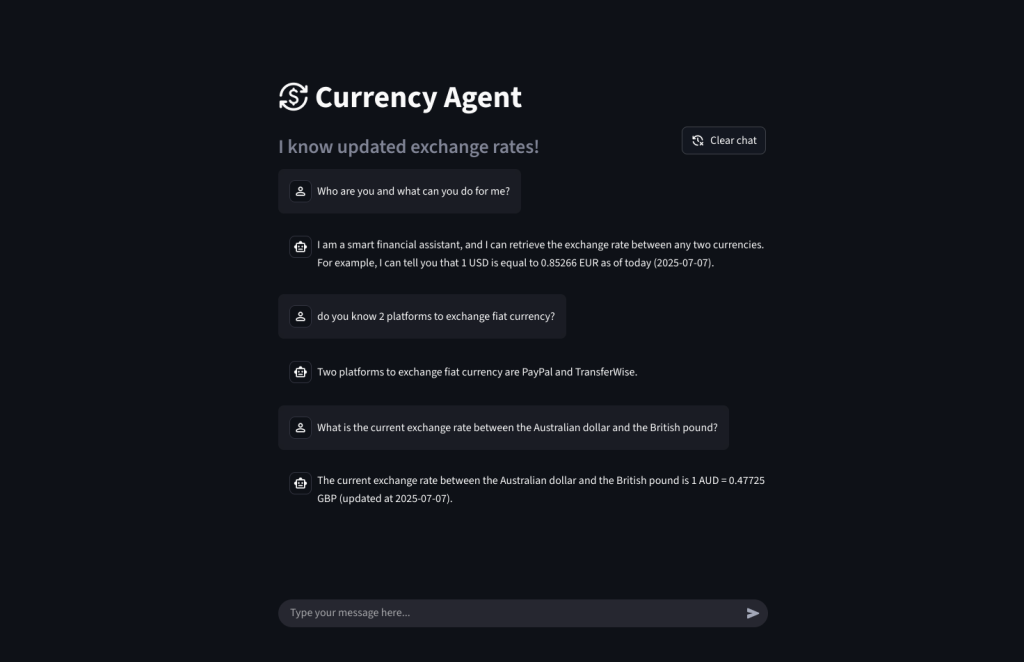

In this guide, you'll connect the official OpenAI Agents SDK to LLaMA 3.3 served by Regolo, then build a currency bot that uses function calling and runs on open weights and European infrastructure. The whole setup takes under 10 minutes.

Outcome

- Open Model Power: LLaMA 3.3 supports function calling, so it can stand in for GPT-4o in many agentic workflows.

- SDK Compatibility: You don’t need to rewrite your agent logic. Regolo’s API is 100% OpenAI-compatible, so the SDK just works.

- Privacy & Control: Keep your agent’s reasoning traces and data inputs within the EU (Regolo’s green cloud), avoiding US data residency issues.

Prerequisites (Fast)

- Regolo API Key: From your dashboard.

- Python 3.10+ with the required packages: pip install openai-agents openai httpx streamlit

- LLaMA 3.3: Supported natively on Regolo.
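Rather than pasting the API key into source code, you can read it from the environment. The sketch below assumes two variable names chosen for this guide, REGOLO_API_KEY and REGOLO_BASE_URL (hypothetical, not names Regolo requires), and a hypothetical load_regolo_config helper; the default base URL is the one used in the provider code.

```python
import os
from dataclasses import dataclass

# Hypothetical environment variable names chosen for this guide, not mandated by Regolo
DEFAULT_BASE_URL = "https://api.regolo.ai/v1"


@dataclass(frozen=True)
class RegoloConfig:
    api_key: str
    base_url: str = DEFAULT_BASE_URL


def load_regolo_config() -> RegoloConfig:
    """Read the Regolo key (required) and base URL (optional) from the environment."""
    api_key = os.environ.get("REGOLO_API_KEY")
    if not api_key:
        raise RuntimeError("Set REGOLO_API_KEY before running the agent.")
    # Fall back to the public endpoint when no override is set
    base_url = os.environ.get("REGOLO_BASE_URL", DEFAULT_BASE_URL)
    return RegoloConfig(api_key=api_key, base_url=base_url)
```

In the provider you would then pass config.api_key and config.base_url to AsyncOpenAI instead of hard-coded strings. Failing fast with a clear error is friendlier than a 401 from the API halfway through an agent run.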

Step-by-Step (Code Blocks)

1) Configure the Regolo Provider

The Agents SDK lets you plug in a custom model provider. We'll define one that points the SDK's Chat Completions model class at Regolo's OpenAI-compatible endpoint.

```python
from openai import AsyncOpenAI
from agents import Model, ModelProvider, OpenAIChatCompletionsModel, set_tracing_disabled

# Tracing exports to OpenAI by default; turn it off when no OpenAI key is configured
set_tracing_disabled(True)


class RegoloProvider(ModelProvider):
    def __init__(self) -> None:
        # Client pointed at Regolo's OpenAI-compatible endpoint
        self.client = AsyncOpenAI(
            base_url="https://api.regolo.ai/v1",
            api_key="YOUR_REGOLO_KEY",  # replace with the key from your Regolo dashboard
        )

    def get_model(self, model_name: str | None) -> Model:
        # Default to Llama-3.3 if no model is specified
        return OpenAIChatCompletionsModel(
            model=model_name or "Llama-3.3-70B-Instruct",
            openai_client=self.client,
        )


REGOLO_PROVIDER = RegoloProvider()
```

2) Define a Real Tool

LLaMA 3.3 handles tool calls well. Let's create a live currency converter that fetches rates from the Frankfurter API with httpx.
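It helps to know what the model actually receives when you decorate a function with function_tool: a JSON schema built from the function's name, type hints, and docstring. The SDK builds this with its own machinery (including strict-mode schemas), so the stdlib sketch below only approximates the shape, in the Chat Completions tools format. sketch_tool_schema is a hypothetical helper, and the stub merely mirrors the signature and docstring of the real tool.

```python
import inspect
from typing import Any, Callable, get_type_hints

# Minimal Python-to-JSON-Schema type mapping for this sketch
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def sketch_tool_schema(fn: Callable[..., Any]) -> dict:
    """Approximate the tool definition a model sees for a typed, documented function."""
    hints = get_type_hints(fn)
    params = inspect.signature(fn).parameters
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            "description": inspect.getdoc(fn) or "",
            "parameters": {
                "type": "object",
                "properties": {n: {"type": _JSON_TYPES[hints[n]]} for n in params},
                # Parameters without defaults are required
                "required": [n for n, p in params.items() if p.default is inspect.Parameter.empty],
            },
        },
    }


# Stub with the same signature and docstring as the real tool (no network call)
async def get_exchange_rate(base: str, target: str) -> str:
    """Get the current exchange rate between two currency codes (e.g., USD, EUR)."""
    raise NotImplementedError


print(sketch_tool_schema(get_exchange_rate)["function"]["parameters"]["required"])
# prints ['base', 'target']
```

This is why the type hints and the docstring matter: they are the only description of the tool the model gets, so a precise docstring tends to produce better tool calls.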

```python
import httpx
from agents import function_tool


@function_tool
async def get_exchange_rate(base: str, target: str) -> str:
    """Get the current exchange rate between two currency codes (e.g., USD, EUR)."""
    url = f"https://api.frankfurter.dev/v1/latest?base={base.upper()}"
    async with httpx.AsyncClient(timeout=10.0) as client:
        resp = await client.get(url)
    if resp.status_code != 200:
        return "Error fetching rates."
    # The response maps currency codes to rates under "rates"
    rate = resp.json().get("rates", {}).get(target.upper())
    return f"1 {base.upper()} = {rate} {target.upper()}" if rate else "Currency not found."
```

3) Create the Agent

Instantiate the agent with our tool. The Regolo provider is supplied at run time through RunConfig, so the agent definition itself stays provider-agnostic.

```python
import asyncio

from agents import Agent, RunConfig, Runner

agent = Agent(
    name="CurrencyBot",
    instructions="You are a helpful financial assistant. Use tools to check rates.",
    tools=[get_exchange_rate],
)


# Test run
async def main() -> None:
    result = await Runner.run(
        agent,
        "How many Euros can I get for 100 US Dollars?",
        run_config=RunConfig(model_provider=REGOLO_PROVIDER),
    )
    print(result.final_output)


# Uncomment to test from the command line (keep it commented out in the Streamlit app)
# asyncio.run(main())
```

Expected output: the agent calls the tool, multiplies 100 by the returned rate, and answers in plain language.
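In this flow the model does the multiplication itself, and LLMs can occasionally slip on arithmetic. If you need exact totals, one option is to let code do the math. The sketch below assumes a Frankfurter-style payload (a "rates" mapping from currency code to rate); convert is a hypothetical helper, and the 0.92 rate is a made-up value for illustration, not a real quote.

```python
from decimal import ROUND_HALF_UP, Decimal


def convert(amount: str, payload: dict, target: str) -> Decimal:
    """Multiply an amount by the target rate in a Frankfurter-style payload."""
    rate = payload.get("rates", {}).get(target.upper())
    if rate is None:
        raise KeyError(f"No rate for {target}")
    # Decimal(str(...)) avoids binary floating-point artifacts in money math
    total = Decimal(amount) * Decimal(str(rate))
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Illustrative payload; 0.92 is a made-up rate, not a real quote
sample = {"base": "USD", "rates": {"EUR": 0.92}}
print(convert("100", sample, "eur"))  # prints 92.00
```

Passing the amount as a string keeps it exact, and rounding to cents happens once, at the end. Wrapped in function_tool, a helper like this would let the agent return a computed total instead of a rate it must multiply itself.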

4) Build a Streamlit UI

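Wrap it all in a Streamlit chat interface for a live demo. The script assumes the provider, tool, and agent from the previous steps are defined in the same file; start it with streamlit run followed by the filename.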
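Streamlit scripts run synchronously, which is why the UI code wraps Runner.run in asyncio.run. That works in a plain script, but asyncio.run raises RuntimeError when an event loop is already running in the current thread (as in a Jupyter notebook). A small bridge such as the hypothetical run_coro_sync helper covers both cases; it is a sketch, not part of the Agents SDK, which separately offers Runner.run_sync for simple synchronous calls.

```python
import asyncio
import concurrent.futures
from typing import Any, Coroutine, TypeVar

T = TypeVar("T")


def run_coro_sync(coro: Coroutine[Any, Any, T]) -> T:
    """Run a coroutine to completion from synchronous code."""
    try:
        asyncio.get_running_loop()
    except RuntimeError:
        # No loop in this thread (the usual case in a Streamlit script)
        return asyncio.run(coro)
    # A loop is already running here (e.g. a notebook): run in a worker thread
    with concurrent.futures.ThreadPoolExecutor(max_workers=1) as pool:
        return pool.submit(asyncio.run, coro).result()
```

In the app you would write result = run_coro_sync(Runner.run(agent, user_msg, run_config=RunConfig(model_provider=REGOLO_PROVIDER))). The worker-thread path blocks the caller until the run finishes, which is acceptable for a demo but not for a busy async server.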

```python
import asyncio

import streamlit as st
from agents import RunConfig, Runner

# Assumes `agent` and `REGOLO_PROVIDER` are defined earlier in this file
st.title("🇪🇺 Regolo Currency Agent")

user_msg = st.chat_input("Ask about exchange rates...")

if user_msg:
    with st.chat_message("user"):
        st.write(user_msg)
    with st.spinner("Agent is reasoning..."):
        # Run the agent with our custom provider
        result = asyncio.run(
            Runner.run(agent, user_msg, run_config=RunConfig(model_provider=REGOLO_PROVIDER))
        )
    with st.chat_message("assistant"):
        st.write(result.final_output)
```

Production-Ready: Agent Patterns

The real strength of the Agents SDK is handoffs. You can create a “Triage Agent” that routes queries to a “Finance Agent” (LLaMA 3.3) or a “Coding Agent” (Qwen 2.5-Coder), all running on Regolo.

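```python
from agents import Agent

finance_agent = Agent(
    name="Finance",
    handoff_description="Answers currency and exchange-rate questions.",
    instructions="You are a financial assistant. Use tools to check rates.",
    tools=[get_exchange_rate],
)

triage_agent = Agent(
    name="Triage",
    instructions="Route financial questions to the Finance agent.",
    handoffs=[finance_agent],
)
```

This multi-agent pattern runs on Regolo's open models: pass the same RunConfig(model_provider=REGOLO_PROVIDER) when you run triage_agent.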
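Conceptually, the SDK exposes each handoff to the model as a tool (by default named along the lines of transfer_to_finance), and when the model calls that tool, the run continues with the target agent. The toy model below is a simplified, stdlib-only illustration of that routing step, not the SDK's implementation; ToyAgent, handoff_tool_name, and resolve_handoff are hypothetical names, and the real SDK also carries the conversation history over to the new agent.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical stand-in for an agent: just a name and its handoff targets."""
    name: str
    handoffs: list["ToyAgent"] = field(default_factory=list)


def handoff_tool_name(agent: ToyAgent) -> str:
    # Simplified version of the transfer_to_<name> naming idea
    return "transfer_to_" + agent.name.lower().replace(" ", "_")


def resolve_handoff(current: ToyAgent, tool_call: str) -> ToyAgent:
    """Return the agent that should handle the next turn after a tool call."""
    targets = {handoff_tool_name(a): a for a in current.handoffs}
    # Any other tool call leaves control with the current agent
    return targets.get(tool_call, current)


finance = ToyAgent("Finance")
triage = ToyAgent("Triage", handoffs=[finance])
print(resolve_handoff(triage, "transfer_to_finance").name)  # prints Finance
```

Because routing is just another tool call, the triage model needs the same reliable function calling as the finance agent, which is why tool-calling quality matters even for agents that never touch an external API.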

Benchmarks & Costs

| Feature | Regolo (LLaMA 3.3) | GPT-4o |
|---|---|---|
| Tool Calling | Reliable. Zero-shot JSON schema adherence. | Excellent. |
| Privacy | Zero retention. | Standard retention. |
| Cost | Significantly lower (~$0.70/1M tokens). | ~$2.50+/1M tokens. |
| Ecosystem | Open. Agents SDK works without lock-in. | Closed. |
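To see what the per-token prices in the table mean for a real workload, multiply them out. The sketch below uses the table's approximate figures as flat rates and a hypothetical 50M-token monthly workload; actual pricing changes over time and often differs for input and output tokens, so check current price pages before budgeting.

```python
# Approximate per-million-token prices from the table above (flat-rate simplification)
PRICE_PER_MILLION_USD = {"Regolo (LLaMA 3.3)": 0.70, "GPT-4o": 2.50}


def estimate_cost(tokens: int, price_per_million: float) -> float:
    """Return the approximate USD cost for a token count, rounded to cents."""
    return round(tokens / 1_000_000 * price_per_million, 2)


monthly_tokens = 50_000_000  # hypothetical workload
for name, price in PRICE_PER_MILLION_USD.items():
    print(f"{name}: ${estimate_cost(monthly_tokens, price):.2f}")
# prints:
# Regolo (LLaMA 3.3): $35.00
# GPT-4o: $125.00
```

Agent runs multiply token usage (each tool call and handoff adds a model round trip), so the gap grows with workflow complexity rather than staying fixed per question.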


Resources & Community

Official Documentation:

- Regolo Platform – European LLM provider, Zero Data-Retention and 100% Green


Join the Community:

- Regolo Discord – Share your automation builds

- CheshireCat GitHub – Contribute plugins

- Follow Us on X @regolo_ai – Show your integrations!

- Open discussion on our Subreddit Community

🚀 Ready to Deploy?

Get Free Regolo Credits →

Built with ❤️ by the Regolo team. Questions? support@regolo.ai